Hi everyone,

This is going to be a short hands-on session with LangChain4j. We'll build a simple CLI-based chatbot that can:

- Remember the conversation

- Search the internet when required to give up-to-date answers.

This guide contains two parts:

- A simpler implementation using high-level abstractions provided by LangChain4j.

- A deeper dive into how these abstractions might work under the hood.


Link to full code: https://github.com/karan-79/cli-chatbot-langchain4j

So let's get into it.

What we will use

- OpenAI as the LLM provider (you can use any other provider or even run a local model).

- LangChain4j, the Java library that simplifies working with LLMs.

- Tavily, a tool that makes extracting useful information from webpages easier. Sign up and get a free API key here: https://tavily.com/

Setup

- Open your IDE and set up a Java project using Maven or the build tool of your choice (I am using IntelliJ and Maven).

- Add the following dependencies to your pom.xml:

```xml
<dependency>
    <groupId>com.fasterxml.jackson.core</groupId>
    <artifactId>jackson-databind</artifactId>
    <version>2.15.2</version>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-web-search-engine-tavily</artifactId>
    <version>1.0.0-beta1</version>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j</artifactId>
    <version>1.0.0-beta1</version>
</dependency>
<dependency>
    <groupId>dev.langchain4j</groupId>
    <artifactId>langchain4j-open-ai</artifactId>
    <version>1.0.0-beta1</version>
</dependency>
```


Using out-of-the-box abstractions

Step 1: Build a ChatLanguageModel.

`ChatLanguageModel` is a language-level API that abstracts away the API calls to the LLM.

In simple terms, it's an interface with different implementations for different LLM providers. Since we're using `langchain4j-open-ai`, we'll use the OpenAI implementation, `OpenAiChatModel`, provided by `langchain4j-open-ai`.

```java
import dev.langchain4j.model.chat.ChatLanguageModel;
import dev.langchain4j.model.openai.OpenAiChatModel;
import dev.langchain4j.model.openai.OpenAiChatModelName;

ChatLanguageModel model = OpenAiChatModel.builder()
        .apiKey(System.getenv("OPENAI_API_KEY")) // read the key from an environment variable
        .modelName(OpenAiChatModelName.GPT_4_O_MINI)
        .build();
```


Here we use the builder available on `OpenAiChatModel` and provide some configuration, such as the model we want to use and the API key.

Now that we have a model ready, we can invoke it by sending it `ChatMessage`s.

`ChatMessage` is also a language-level API that eases communication with the LLM for chat completions. There are various types of `ChatMessage` (user, AI, system and more); the LangChain4j documentation covers them in detail.
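To make these two ideas concrete without needing an API key, here is a tiny plain-Java sketch: one model interface with swappable implementations, and messages tagged with a role. Every name in it (`Msg`, `UserMsg`, `ChatModelSketch`, `EchoModel`, and so on) is hypothetical and is not LangChain4j's API; the real `ChatMessage` family also has types this sketch leaves out, such as one for tool results.

```java
import java.util.List;

// Hypothetical, simplified message types (NOT LangChain4j's classes).
// Each message carries a role, which is what chat-completion APIs expect.
interface Msg {
    String role();
    String text();
}

record SystemMsg(String text) implements Msg { public String role() { return "system"; } }
record UserMsg(String text) implements Msg { public String role() { return "user"; } }
record AiMsg(String text) implements Msg { public String role() { return "assistant"; } }

// Hypothetical model abstraction: one interface, one implementation per provider.
interface ChatModelSketch {
    AiMsg generate(List<Msg> messages);
}

// A stand-in "provider" that needs no network: it echoes the latest user message.
class EchoModel implements ChatModelSketch {
    @Override
    public AiMsg generate(List<Msg> messages) {
        // Walk backwards to find the most recent user message.
        for (int i = messages.size() - 1; i >= 0; i--) {
            if (messages.get(i) instanceof UserMsg u) {
                return new AiMsg("You said: " + u.text());
            }
        }
        return new AiMsg("Nothing to reply to.");
    }
}
```

Swapping `EchoModel` for a class that calls a real provider would not change the calling code, which is the point of having the interface.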

Step 2: Now we declare our AI chat service.

The idea is this: to build an AI-powered (non-agentic) app, you need a structured way to communicate your needs to the LLM and get relevant answers back. That involves:

- Formatting inputs for the LLM.

- Ensuring the LLM responds in a specific format.

- Parsing responses (e.g., from custom tools).

One way is to have the consumer (you) define that structure. LangChain (Python) introduced the concept of **Chains**, which lets you define a flow where your input passes through a series of steps invoking the LLM, custom tools, parsers, etc., and at the end you get your desired result.
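A chain can be pictured as plain function composition. This sketch uses `java.util.function.Function` to wire three steps together; the steps and the fake model are hypothetical and only show the shape of the idea, not LangChain's (or LangChain4j's) chain API.

```java
import java.util.function.Function;

// A minimal sketch of the "chain" idea: each step is a Function,
// and a chain is simply their composition.
class ChainSketch {
    // Step 1: format the raw input into a prompt.
    static final Function<String, String> formatPrompt =
            input -> "Answer in one word: " + input;

    // Step 2: a fake "model" (hypothetical) that upper-cases the last word of the prompt.
    static final Function<String, String> fakeModel = prompt -> {
        String[] words = prompt.trim().split("\\s+");
        return "ANSWER=" + words[words.length - 1].toUpperCase();
    };

    // Step 3: parse the model's raw output into the value we actually want.
    static final Function<String, String> parseAnswer =
            raw -> raw.substring(raw.indexOf('=') + 1);

    // The chain: input -> prompt -> model output -> parsed result.
    static final Function<String, String> chain =
            formatPrompt.andThen(fakeModel).andThen(parseAnswer);
}
```

For example, `ChainSketch.chain.apply("java")` runs all three steps and yields `"JAVA"`. Every extra step (a tool call, a retry, a validator) is another link you have to wire up yourself, which is the manual structuring AI Services try to hide.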

LangChain4j does have the concept of Chains, but it encourages a different solution, which it calls AI Services.

The idea is to abstract away all the complex manual structuring of flows and offer a simple declarative interface, while LangChain4j takes care of everything else.

So we create our own service:

```java
public interface ChatService {
    String chat(String userMessage);
}
```


`ChatService` has a single method, `chat`, that takes a `String` input and returns a `String` output.

Then we build our service by telling LangChain4j our desired structure:

```java
import dev.langchain4j.service.AiServices;

ChatService service = AiServices.builder(ChatService.class)
        .chatLanguageModel(model) // the OpenAiChatModel built in Step 1
        .build();
```


Now that our service is ready, we can invoke it by calling the `chat` method we declared.

```java
service.chat("Hi how are you");
// I'm just a computer program, but I'm here and ready to help you! How can
// I assist you today?
```


LangChain4j is calling the OpenAI API, but notice how we didn't have to deal with any of the API-level responses.
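How can an interface with no implementation return answers? One plausible way, sketched here with the JDK's `java.lang.reflect.Proxy`, is to intercept each method call, forward the argument to a model, and return the reply. `TinyAiServices` and the lambda "model" are hypothetical; this is not LangChain4j's code, and its real `AiServices` does much more (memory, tools, output conversion).

```java
import java.lang.reflect.Proxy;
import java.util.function.UnaryOperator;

// Same shape as the ChatService interface declared above.
interface ChatService {
    String chat(String userMessage);
}

// Hypothetical helper: backs a declarative interface with a JDK dynamic proxy.
// `model` stands in for the real LLM call.
class TinyAiServices {
    static <T> T create(Class<T> serviceInterface, UnaryOperator<String> model) {
        Object proxy = Proxy.newProxyInstance(
                serviceInterface.getClassLoader(),
                new Class<?>[] {serviceInterface},
                (self, method, args) -> {
                    // Methods inherited from Object are not LLM calls; answer them locally.
                    if (method.getDeclaringClass() == Object.class) {
                        if (method.getName().equals("hashCode")) return System.identityHashCode(self);
                        if (method.getName().equals("equals")) return self == args[0];
                        return "TinyAiService(" + serviceInterface.getSimpleName() + ")";
                    }
                    // A real library would also build messages, apply memory, offer tools
                    // and convert the reply to the method's return type. Here: text in, text out.
                    String userMessage = (String) args[0];
                    return model.apply(userMessage);
                });
        return serviceInterface.cast(proxy);
    }
}
```

Usage: `TinyAiServices.create(ChatService.class, msg -> "Echo: " + msg).chat("Hi")` returns `"Echo: Hi"`. Because the proxy can inspect `method.getReturnType()`, a library built this way can also adapt the reply to other return types, which is what the next paragraph relies on.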

If we change our interface, we still get the response in the desired structure. The LangChain4j AI Services documentation lists more supported return types.

```java
import dev.langchain4j.data.message.AiMessage;

interface ChatService {
    AiMessage chat(String message);
}

AiMessage aiMessage = service.chat("Hi how are you");
aiMessage.text();
// Hello! I'm just a program, so I don't have feelings, but I'm here and ready
// to help you. How can I assist you today?
```


Step 3: Let's add some interaction

We read the user's input from the console, send it to the LLM and print the response.

```java
import java.util.List;
import java.util.Scanner;

var scanner = new Scanner(System.in);
while (true) {
    try {
        System.out.print("User: ");
        var userInput = scanner.nextLine();
        // typing q, quit or exit ends the chat
        if (List.of("q", "quit", "exit").contains(userInput.trim())) {
            System.out.println("Assistant: Bye Bye");
            break;
        }
        var answer = service.chat(userInput);
        System.out.println("Assistant: " + answer);
    } catch (Exception e) {
        System.out.println("Something went wrong");
        break;
    }
}
```


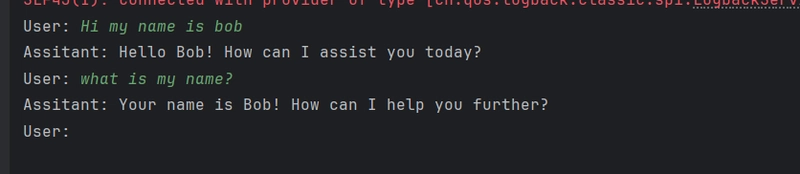

here we can see the output:

Now we have the interface and are able to send messages, but something is missing.

Each input is independent, so the chatbot does not remember previous interactions.

Let's fix that.

Step 4: Adding memory to our chatbot

LLMs are stateless—they process one input at a time without remembering previous interactions. To enable conversational flow, we need to send the conversation history along with the current message.
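A toy illustration of what "stateless" means in practice. `fakeModel` is a hypothetical stand-in for an LLM that only sees the list it receives in each call, so it can recall the user's name only if our app resends the earlier turns.

```java
import java.util.ArrayList;
import java.util.List;

class StatelessDemo {
    private static final String NAME_PREFIX = "user: My name is ";

    // Hypothetical stand-in for a stateless LLM: it can only use the messages
    // passed in *this* call; nothing survives between calls.
    static String fakeModel(List<String> messages) {
        for (String m : messages) {
            if (m.startsWith(NAME_PREFIX)) {
                return "Your name is " + m.substring(NAME_PREFIX.length());
            }
        }
        return "I don't know your name.";
    }

    // Sending only the current message each time: the earlier turn is lost.
    static String askWithoutHistory() {
        fakeModel(List.of("user: My name is Alice"));        // turn 1, sent on its own
        return fakeModel(List.of("user: What is my name?")); // turn 2, sent on its own
    }

    // Sending the accumulated history with every call.
    static String askWithHistory() {
        List<String> history = new ArrayList<>();
        history.add("user: My name is Alice");
        history.add("assistant: Nice to meet you, Alice!"); // earlier reply, stored by our app
        history.add("user: What is my name?");              // current message
        return fakeModel(history);
    }
}
```

`askWithoutHistory()` returns `"I don't know your name."`, while `askWithHistory()` returns `"Your name is Alice"`: the only difference is what the app chose to send.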

To do this, LangChain4j has the concept of Chat Memory.

Chat Memory is different from just sending the whole history, because when the history grows large you need some optimizations to avoid exceeding the LLM's context window.

You can learn more in the LangChain4j documentation on chat memory.

LangChain4j provides the `MessageWindowChatMemory` class, which stores our interactions with the LLM. We pass it to our AI service and it manages the messages automatically. By default it stores the interactions in memory, but it can also be configured to persist them.

```java
import dev.langchain4j.memory.chat.MessageWindowChatMemory;

ChatService service = AiServices.builder(ChatService.class)
        .chatLanguageModel(model)
        // keep only the 20 most recent messages
        .chatMemory(MessageWindowChatMemory.withMaxMessages(20))
        .build();
```


Here we used `MessageWindowChatMemory.withMaxMessages(20)`, which configures a `MessageWindowChatMemory` instance that stores only the 20 most recent messages.

There are several techniques for managing an LLM's chat memory, such as:

- Summarizing the history.

- Selective eviction (removal) of messages from the history.

- Evicting the oldest message in the history.

For our use case we use the last, simplest one: keep up to 20 messages in the conversation and evict the oldest one once the count goes above 20.
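The "evict the oldest" strategy fits in a few lines. This `WindowMemory` class is a hypothetical simplification that stores plain strings; LangChain4j's real `MessageWindowChatMemory` works with `ChatMessage` objects and handles more cases than this sketch.

```java
import java.util.ArrayList;
import java.util.List;

// A minimal sketch of a message-window memory: keep at most `maxMessages`
// messages and evict the oldest when the limit is exceeded.
class WindowMemory {
    private final int maxMessages;
    private final List<String> messages = new ArrayList<>();

    WindowMemory(int maxMessages) {
        if (maxMessages < 1) throw new IllegalArgumentException("maxMessages must be >= 1");
        this.maxMessages = maxMessages;
    }

    void add(String message) {
        messages.add(message);
        // Evict from the front (oldest first) until we are back within the window.
        while (messages.size() > maxMessages) {
            messages.remove(0);
        }
    }

    // Read-only snapshot of what would be sent to the LLM.
    List<String> messages() {
        return List.copyOf(messages);
    }
}
```

With `new WindowMemory(3)` and five added messages `m1` to `m5`, only `m3`, `m4` and `m5` remain, which is the same behavior `withMaxMessages(20)` gives us at a larger size.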

So, now we can see it in action:

Step 5: Adding internet search ability to our chat.

Since LLMs are trained on data up to a certain date, they do not know about the latest events. To get around this, we use something called tool calling.

Tool calling

LLM providers such as OpenAI support tool calling.

Essentially, you tell the LLM in the conversation that you have some tools (functions in your code), along with their descriptions (what they do, what arguments they take, what they return). The LLM can then request the execution of a tool to get more accurate context or information. That request arrives as a normal response, and it is our responsibility to check whether the LLM asked us to run a tool (function) with the required arguments, run it, and send the results back so the LLM can produce a proper response. See the above image from OpenAI.
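The request/execute/resend cycle can be sketched without any network calls. `fakeModel` is a scripted, hypothetical stand-in for the LLM, and `Message`/`ModelReply` are illustrative types, not a real API; the loop in `run` is the part a framework like LangChain4j automates for you.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

// Hypothetical message and reply shapes for this sketch (not a real API).
record Message(String role, String content) {}

record ModelReply(String answer, String toolName, String toolArgument) {
    boolean isToolCall() { return toolName != null; }
}

class ToolLoop {
    // Scripted stand-in for the LLM: without a tool result it asks for a search;
    // once a "tool" message is in the conversation, it answers from that result.
    static ModelReply fakeModel(List<Message> messages) {
        for (Message m : messages) {
            if (m.role().equals("tool")) {
                return new ModelReply("Based on the search: " + m.content(), null, null);
            }
        }
        String lastUserText = messages.get(messages.size() - 1).content();
        return new ModelReply(null, "searchInternet", lastUserText);
    }

    // Call the model; if it requests a tool, execute it, append the result
    // as a "tool" message, and call the model again.
    static String run(String userInput, Map<String, Function<String, String>> tools,
                      List<Message> conversation) {
        conversation.add(new Message("user", userInput));
        for (int round = 0; round < 5; round++) { // cap rounds to avoid endless tool calls
            ModelReply reply = fakeModel(conversation);
            if (!reply.isToolCall()) {
                conversation.add(new Message("assistant", reply.answer()));
                return reply.answer();
            }
            conversation.add(new Message("assistant",
                    "tool_call: " + reply.toolName() + "(" + reply.toolArgument() + ")"));
            Function<String, String> tool = tools.get(reply.toolName());
            String result = (tool == null)
                    ? "Error: unknown tool " + reply.toolName()
                    : tool.apply(reply.toolArgument());
            conversation.add(new Message("tool", result));
        }
        throw new IllegalStateException("Too many tool-call rounds");
    }
}
```

After one question, the conversation holds four messages: user, assistant (tool call), tool (result), assistant (final answer). That is the same shape as the two LLM requests shown in the logs later in this post.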

Find more info in the OpenAI function calling docs and the LangChain4j tools docs.

LangChain4j provides a pretty decent way to expose your Java methods as tools.

The `@Tool` annotation turns methods in a class into a proper tool specification, as required by the chat APIs of LLM providers like OpenAI.

Below is one example of how you can achieve this:

```java
import dev.langchain4j.agent.tool.Tool;

public class Tools {

    @Tool("Searches the internet for relevant information for given input query")
    public String searchInternet(String query) {
        // the implementation comes later
        return "";
    }
}
```


The tools can then be provided to the LLM in API calls via LangChain4j's AI Service:

```java
ChatService service = AiServices.builder(ChatService.class)
        .chatLanguageModel(model)
        .chatMemory(MessageWindowChatMemory.withMaxMessages(20))
        .tools(new Tools()) // here we instantiate the Tools class, which LangChain4j then reads
        .build();
```


LangChain4j now automatically generates a properly formatted description of your tool, which can be sent to OpenAI's APIs.
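Roughly how could such a description be generated from an annotated method? Here is a reflection-based sketch using hypothetical `@MyTool` and `@Arg` annotations (not LangChain4j's `@Tool`). The output is a simplified JSON string in the spirit of OpenAI's function definitions, not the exact payload LangChain4j sends.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical annotation standing in for LangChain4j's @Tool.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface MyTool { String value(); }

// Explicit parameter name: without the -parameters compiler flag,
// reflection only sees names like "arg0".
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.PARAMETER)
@interface Arg { String value(); }

class MyTools {
    @MyTool("Searches the internet for relevant information for given input query")
    public String searchInternet(@Arg("query") String query) { return ""; }

    public String helper() { return ""; } // not annotated, so not exposed as a tool
}

class ToolSpecSketch {
    // Builds a simplified function-definition-like JSON string per annotated method.
    // (No JSON escaping; fine for this sketch's fixed strings.)
    static List<String> specsFor(Class<?> toolClass) {
        Method[] methods = toolClass.getDeclaredMethods();
        Arrays.sort(methods, Comparator.comparing(Method::getName)); // deterministic order
        List<String> specs = new ArrayList<>();
        for (Method m : methods) {
            MyTool tool = m.getAnnotation(MyTool.class);
            if (tool == null) continue;
            List<String> props = new ArrayList<>();
            List<String> required = new ArrayList<>();
            for (Parameter p : m.getParameters()) {
                Arg arg = p.getAnnotation(Arg.class);
                String name = (arg != null) ? arg.value() : p.getName();
                props.add("\"" + name + "\": {\"type\": \"" + jsonType(p.getType()) + "\"}");
                required.add("\"" + name + "\"");
            }
            specs.add("{\"name\": \"" + m.getName() + "\", "
                    + "\"description\": \"" + tool.value() + "\", "
                    + "\"parameters\": {\"type\": \"object\", \"properties\": {"
                    + String.join(", ", props) + "}, \"required\": ["
                    + String.join(", ", required) + "]}}");
        }
        return specs;
    }

    // Tiny Java-type to JSON-Schema-type mapping, enough for the sketch.
    static String jsonType(Class<?> type) {
        if (type == String.class) return "string";
        if (type == int.class || type == long.class || type == Integer.class || type == Long.class) return "integer";
        if (type == boolean.class || type == Boolean.class) return "boolean";
        if (type == double.class || type == float.class || Number.class.isAssignableFrom(type)) return "number";
        return "object";
    }
}
```

Only `searchInternet` produces a spec; `helper` is skipped because it has no annotation. The method name, description and parameter schema are exactly the pieces the LLM needs to decide when and how to call the tool.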

Now that we have set up a tool, let's implement what we want it to do.

Tavily usage

We are using an API from Tavily.

Tavily saves us the cumbersome effort of extracting LLM-ready information from the webpages returned by a web search. It also applies content optimizations that make the results richer for an LLM.

We'll use the Tavily API abstraction provided by LangChain4j via `langchain4j-web-search-engine-tavily`. Just another language-level API :).

```java
import dev.langchain4j.agent.tool.Tool;
import dev.langchain4j.web.search.WebSearchOrganicResult;
import dev.langchain4j.web.search.tavily.TavilyWebSearchEngine;
import java.util.List;

@Tool("Searches the internet for relevant information for given input query")
public List<WebSearchOrganicResult> searchInternet(String query) {
    // we make a client for Tavily
    TavilyWebSearchEngine webSearchEngine = TavilyWebSearchEngine.builder()
            .apiKey(System.getenv("TAVILY_API_KEY"))
            .build();
    // we invoke the search, passing 'query'
    var webSearchResults = webSearchEngine.search(query);
    // there can be many results from different webpages, so we only pick up to 4
    return webSearchResults.results().subList(0, Math.min(4, webSearchResults.results().size()));
}
```


Here we simply call the Tavily API.

The `query` argument receives whatever the LLM wants to search for, so we just pass it on to Tavily.

And lastly, we return the results.
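Two details of that method are worth a closer look. The `Math.min` guard matters because `subList(0, 4)` throws `IndexOutOfBoundsException` when fewer than 4 results come back. And if you ever want control over what text reaches the LLM, you could flatten the results yourself and return a `String`. In the code above LangChain4j handles that conversion for you; the `SearchResult` record below is a hypothetical stand-in carrying similar data (title, URL, text).

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical stand-in for a web search result.
record SearchResult(String title, String url, String content) {}

class SearchResultsSketch {
    // Same guard as subList(0, Math.min(4, size)):
    // never request more elements than the list has.
    static List<SearchResult> firstN(List<SearchResult> results, int n) {
        return results.subList(0, Math.min(n, results.size()));
    }

    // Flatten results into plain text, one block per result, for the tool message.
    static String toToolText(List<SearchResult> results) {
        return results.stream()
                .map(r -> r.title() + " (" + r.url() + ")\n" + r.content())
                .collect(Collectors.joining("\n\n"));
    }
}
```

Returning compact text like this can also help keep tool results from eating too much of the context window.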

Now we are all set. Let's chat!

We got a proper response with the latest information. Also notice that the tool we defined has a `List<WebSearchOrganicResult>` return type; LangChain4j makes sure the tool's (function's) results are properly formatted and sent back to the LLM.

Enable LangChain4j logging

To see more of what's happening, enable logging.

LangChain4j logs via SLF4J, so let's add a logging backend dependency for that in pom.xml:

```xml
<dependency>
    <groupId>ch.qos.logback</groupId>
    <artifactId>logback-classic</artifactId>
    <version>1.5.8</version>
</dependency>
```


Now enable logging of requests and responses on the model:

```java
ChatLanguageModel model = OpenAiChatModel.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .logRequests(true)  // log every request sent to OpenAI
        .logResponses(true) // log every response received
        .modelName(OpenAiChatModelName.GPT_4_O_MINI)
        .build();
```


Let's try again:

We see that LangChain4j is sending out information about our method that calls Tavily.

Now we get the response back:

![Logged response containing tool_calls](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2F6oeymkna406by18gp6ec.png)

Notice that the response contains `tool_calls`, which LangChain4j interprets as a tool call request. It then executes our method, and we can see the results logged.

Then we see another request being sent to the LLM:

![Logged second request including the tool result](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fyt6if01vpxrt8030aa93.png)

Here LangChain4j sent another request to the LLM on its own, because it now had the tool's response. It attaches the results of the tool call to the messages with `role = tool`, giving the LLM more context about what the user asked.

And finally, we get the LLM's response back.

There you have it, a simple chatbot with search capabilities :).

Getting under the surface

Here we'll go a little bit under the surface of LangChain4j and try to understand how it might be working to enable the magic we saw.

We start by creating a model:

```java
ChatLanguageModel model = OpenAiChatModel.builder()
        .apiKey(System.getenv("OPENAI_API_KEY"))
        .modelName(OpenAiChatModelName.GPT_4_O_MINI)
        .build();
```


Let's understand memory

As we know, LLMs are stateless, so we have to send the whole chat history to emulate a conversational interaction.

The `MessageWindowChatMemory` class we used (created via `MessageWindowChatMemory.withMaxMessages(20)`) is just a holder that keeps track of the messages to be sent in a conversation.

Looking inside the class, we see:

- `MessageWindowChatMemory` contains a `ChatMemoryStore`, whose implementation `InMemoryChatMemoryStore` maintains a `ConcurrentHashMap`.

- This `ConcurrentHashMap` holds a `List<ChatMessage>`; that's where our history goes.

- The key in the `ConcurrentHashMap` distinguishes conversations. In our case we only had one, so we ended up with the default behavior.
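A store like the one described above might look roughly like this. It is a hypothetical sketch that stores plain strings instead of `ChatMessage`; the method names are meant to mirror, as I understand it, LangChain4j's `ChatMemoryStore` interface (`getMessages`, `updateMessages`, `deleteMessages`), but check the library source before relying on that.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

// A minimal in-memory chat memory store: one message list per conversation id.
class InMemoryStoreSketch {
    private final Map<Object, List<String>> store = new ConcurrentHashMap<>();

    List<String> getMessages(Object memoryId) {
        // Unknown ids simply have an empty history.
        return List.copyOf(store.getOrDefault(memoryId, List.of()));
    }

    void updateMessages(Object memoryId, List<String> messages) {
        // Store a defensive copy so callers can't mutate our state afterwards.
        store.put(memoryId, new ArrayList<>(messages));
    }

    void deleteMessages(Object memoryId) {
        store.remove(memoryId);
    }
}
```

A memory built on top of it would read the list for the current id, append the new message (evicting if needed) and write it back. Using a different id per user would give each user an independent conversation.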

So technically we can maintain our own `List<ChatMessage>`, right? Yes.

```java
var chatMemory = new ArrayList<ChatMessage>();
```


Now our conversation code will look like this:

```java
var scanner = new Scanner(System.in);

while (true) {
    try {
        System.out.print("User: ");
        var userInput = scanner.nextLine();
        if (List.of("q", "quit", "exit").contains(userInput.trim())) {
            System.out.println("Assistant: Bye Bye");
            break;
        }
        // append the user input as a UserMessage
        chatMemory.add(UserMessage.from(userInput));
        // send the whole history: the model only knows what is in this list
        var answer = model.generate(chatMemory);
        System.out.println("Assistant: " + answer.content().text());
        // append the model's answer (an AiMessage) so the next turn can see it
        chatMemory.add(answer.content());
    } catch (Exception e) {
        System.out.println("Something went wrong: " + e.getMessage());
        break;
    }
}
```


Here, we call generate on the model, passing the current state of the chatMemory list.

As you can see, we have given the chatbot the ability to remember :)
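One difference from MessageWindowChatMemory.withMaxMessages(20): our plain ArrayList is never trimmed, so every request grows longer. Below is a minimal, hypothetical trimming helper (Java 17+) using a simplified ChatEntry record rather than LangChain4j's message types. It drops the oldest entries beyond a limit, then drops any tool results left at the front, because OpenAI rejects a tool message whose originating tool call is no longer in the history. As far as I can tell, MessageWindowChatMemory applies a similar rule when it evicts messages, but treat this as a sketch, not its actual code.

```java
import java.util.List;

// Hypothetical simplified message: role is "user", "ai" or "tool".
record ChatEntry(String role, String text) {}

final class HistoryTrimmer {
    private HistoryTrimmer() {}

    // Mutates the list in place: removes the oldest entries until at most maxMessages remain,
    // then removes leading "tool" entries whose tool-call request was just evicted.
    static void trim(List<ChatEntry> history, int maxMessages) {
        while (history.size() > maxMessages) {
            history.remove(0);
        }
        while (!history.isEmpty() && history.get(0).role().equals("tool")) {
            history.remove(0);
        }
    }
}
```

In the loop you would call something like HistoryTrimmer.trim(...) right before each generate call; a real version would also keep a system message, if you use one, pinned at the start.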

Let's understand tools

As we saw, tools are functions whose description we provide to the LLM in the format it expects; the LLM can then respond with a tool call request when it decides it needs one.

LangChain4j has a ToolSpecification class that holds the structure of a tool description the LLM requires. We can create one with its builder, or with helper methods like ToolSpecifications.toolSpecificationsFrom(Tools.class).

Let's see what it's doing under the hood.

- The toolSpecificationsFrom method in ToolSpecifications uses Java reflection to get all methods of the class.
- It filters the methods that have a @Tool annotation.
- It extracts the parameters, the method name, and the description provided in @Tool, and builds a List<ToolSpecification> for our Tools class.
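Here is a stripped-down, JDK-only sketch (Java 17+) of that reflection step. The @Tool annotation, ToolSpec record, ToolSpecs.specsFrom method, and ExampleTools class below are hypothetical stand-ins for LangChain4j's @Tool, ToolSpecification, ToolSpecifications.toolSpecificationsFrom, and our Tools class; the real method also builds a JSON schema for each parameter. It also hints at where the "arg0" key used later when reading tool arguments likely comes from: reflection only reports real parameter names when the code is compiled with javac's -parameters flag.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Hypothetical stand-in for LangChain4j's @Tool; RUNTIME retention lets reflection see it.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Tool {
    String value() default "";
}

// Hypothetical, simplified tool description: name, description, parameter names and types.
record ToolSpec(String name, String description, List<String> paramNames, List<Class<?>> paramTypes) {}

final class ToolSpecs {
    private ToolSpecs() {}

    // Collects every @Tool-annotated method declared on the class, sorted by name
    // because getDeclaredMethods() returns methods in no particular order.
    static List<ToolSpec> specsFrom(Class<?> toolsClass) {
        return Arrays.stream(toolsClass.getDeclaredMethods())
                .filter(m -> m.isAnnotationPresent(Tool.class))
                .sorted(Comparator.comparing(Method::getName))
                .map(ToolSpecs::specFrom)
                .toList();
    }

    private static ToolSpec specFrom(Method method) {
        Parameter[] params = method.getParameters();
        // getName() yields "arg0", "arg1", ... unless the class was compiled with -parameters
        List<String> names = Arrays.stream(params).map(Parameter::getName).toList();
        List<Class<?>> types = Arrays.stream(params).<Class<?>>map(Parameter::getType).toList();
        return new ToolSpec(method.getName(), method.getAnnotation(Tool.class).value(), names, types);
    }
}

// Example tools class; the method body is a placeholder, not a real web search.
class ExampleTools {
    @Tool("Searches the internet for up-to-date information")
    String searchInternet(String query) {
        return "results for " + query;
    }

    // not annotated, so it is not exposed as a tool
    String notATool() {
        return "ignored";
    }
}
```

ToolSpecs.specsFrom(ExampleTools.class) yields a single spec for searchInternet; notATool is skipped because it lacks the annotation.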

LangChain4j's chat model accepts this List<ToolSpecification> alongside the chat history:

```java
import dev.langchain4j.agent.tool.ToolSpecifications;

// build the tool descriptions from the @Tool-annotated methods of our Tools class
var toolSpecifications = ToolSpecifications.toolSpecificationsFrom(Tools.class);
// we send the tool specifications along with the chat history
var answer = model.generate(chatMemory, toolSpecifications);
```


Now the request to the LLM includes our tool's specification.
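For OpenAI, "including the tool specification" means adding a tools array to the Chat Completions request body, where each entry has type "function" and a function object with a name, a description, and a JSON Schema describing the parameters. The sketch below renders one tool in that shape by hand. OpenAiToolJson, toOpenAiToolJson, and escape are hypothetical helpers for illustration only: LangChain4j does this mapping internally with a proper JSON library, and this sketch only supports required string parameters.

```java
import java.util.List;
import java.util.stream.Collectors;

final class OpenAiToolJson {
    private OpenAiToolJson() {}

    // Minimal JSON string escaping: quotes, backslashes and control characters.
    static String escape(String s) {
        StringBuilder out = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"' -> out.append("\\\"");
                case '\\' -> out.append("\\\\");
                case '\n' -> out.append("\\n");
                case '\r' -> out.append("\\r");
                case '\t' -> out.append("\\t");
                default -> {
                    if (c < 0x20) {
                        out.append(String.format("\\u%04x", (int) c));
                    } else {
                        out.append(c);
                    }
                }
            }
        }
        return out.append('"').toString();
    }

    // Renders one entry of the "tools" array; every parameter is a required string.
    static String toOpenAiToolJson(String name, String description, List<String> stringParams) {
        String properties = stringParams.stream()
                .map(p -> escape(p) + ":{\"type\":\"string\"}")
                .collect(Collectors.joining(","));
        String required = stringParams.stream()
                .map(OpenAiToolJson::escape)
                .collect(Collectors.joining(","));
        return "{\"type\":\"function\",\"function\":{"
                + "\"name\":" + escape(name) + ","
                + "\"description\":" + escape(description) + ","
                + "\"parameters\":{\"type\":\"object\",\"properties\":{" + properties + "},"
                + "\"required\":[" + required + "]}}}";
    }
}
```

For our tool, toOpenAiToolJson("searchInternet", "...", List.of("arg0")) produces an entry whose only parameter is the string arg0, which is why the model later sends back arguments shaped like {"arg0": "..."}.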

Executing the tool

Since we've taken on the responsibility of passing tool specifications ourselves, we also need to run the tool manually and send its result back to the LLM.

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import dev.langchain4j.data.message.ToolExecutionResultMessage;
import dev.langchain4j.data.message.UserMessage;
import dev.langchain4j.web.search.WebSearchOrganicResult;
import java.util.List;
import java.util.Scanner;

// inside main(), after model, chatMemory and toolSpecifications are set up
var objMapper = new ObjectMapper();
var scanner = new Scanner(System.in);

while (true) {
    try {
        System.out.print("User: ");
        var userInput = scanner.nextLine();
        if (List.of("q", "quit", "exit").contains(userInput.trim())) {
            System.out.println("Assistant: Bye Bye");
            break;
        }

        chatMemory.add(UserMessage.from(userInput));
        var answer = model.generate(chatMemory, toolSpecifications);
        // the AiMessage (possibly holding tool execution requests) goes into the history too
        chatMemory.add(answer.content());

        // check whether the LLM requested a tool call
        if (answer.content().hasToolExecutionRequests()) {
            // we only registered one tool, so this usually iterates once
            for (var toolReq : answer.content().toolExecutionRequests()) {
                String toolResultContent;
                if ("searchInternet".equals(toolReq.name())) {
                    // arguments() is a JSON string like {"arg0": "..."}; "arg0" is the parameter
                    // name reflection reports when the code is compiled without -parameters
                    var input = objMapper.readTree(toolReq.arguments()).get("arg0").textValue();
                    // call the tool method directly
                    var toolResults = new Tools().searchInternet(input);
                    // the LLM expects text, so format the Tavily results
                    toolResultContent = writeToolResultsInText(toolResults);
                } else {
                    // every tool request needs a result message, even an unknown one
                    toolResultContent = "Unknown tool: " + toolReq.name();
                }
                chatMemory.add(ToolExecutionResultMessage.from(toolReq, toolResultContent));
            }

            // only now, with a result for every request, call the LLM again: this is the follow-up
            // request LangChain4j made for us automatically in the previous section.
            // chatMemory now ends with (UserMessage, AiMessage with tool requests, ToolExecutionResultMessage...)
            // for simplicity we don't handle a second round of tool calls here
            var aiAnswerWithToolCall = model.generate(chatMemory, toolSpecifications);
            System.out.println("Assistant (after tool execution): " + aiAnswerWithToolCall.content().text());
            chatMemory.add(aiAnswerWithToolCall.content());
        } else {
            System.out.println("Assistant: " + answer.content().text());
        }
    } catch (Exception e) {
        System.out.println("Something went wrong: " + e.getMessage());
        break;
    }
}

// formats the Tavily search results as plain text for the ToolExecutionResultMessage
private static String writeToolResultsInText(List<WebSearchOrganicResult> toolResults) {
    var text = new StringBuilder();
    for (var result : toolResults) {
        text.append("Title: ").append(result.title())
            .append("\nContent: ").append(result.content())
            .append("\nSummary: ").append(result.snippet())
            .append("\n");
    }
    return text.toString();
}
```
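The loop above hard-codes a single tool. If you add more tools, a lookup table keeps the dispatch in one place. Below is a hypothetical, JDK-only sketch (ToolDispatcher is not a LangChain4j class, and the arguments arrive as an already-parsed Map instead of the raw JSON string). It always returns some text, including for unknown tool names and tools that throw, so every tool call can get a matching ToolExecutionResultMessage: OpenAI rejects a follow-up request in which an assistant tool call has no corresponding tool result.

```java
import java.util.Map;
import java.util.function.Function;

// Hypothetical dispatcher: maps a tool name to a function from parsed arguments to result text.
final class ToolDispatcher {
    private final Map<String, Function<Map<String, String>, String>> tools;

    ToolDispatcher(Map<String, Function<Map<String, String>, String>> tools) {
        this.tools = Map.copyOf(tools);
    }

    // Runs the requested tool; unknown names and runtime failures become error text
    // that the LLM can read, so no tool call is left without a result.
    String execute(String toolName, Map<String, String> arguments) {
        var tool = tools.get(toolName);
        if (tool == null) {
            return "Error: unknown tool '" + toolName + "'";
        }
        try {
            return tool.apply(arguments);
        } catch (RuntimeException e) {
            return "Error while running '" + toolName + "': " + e.getMessage();
        }
    }
}
```

In the chat loop you would call execute for every entry of toolExecutionRequests(), add each result with ToolExecutionResultMessage.from(toolReq, result), and only then call the model again.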


Alright, we now have a pretty raw implementation of the whole flow. Let's try it out:

Thanks for reading, and happy learning!

Original article: Creating a simple web search enabled chat bot using LangChain4j

![Image 3: Creating a simple web search enabled chat bot using LangChain4j](https://media2.dev.to/dynamic/image/width=800%2Cheight=%2Cfit=scale-down%2Cgravity=auto%2Cformat=auto/https%3A%2F%2Fdev-to-uploads.s3.amazonaws.com%2Fuploads%2Farticles%2Fampbosr83f35o5mguqve.png)
